One of the more glorious moments of my not especially glorious medical career was that I was, quite accidentally, instrumental in catalyzing a medical conference on prostate cancer screening in our town. Here’s how it happened.

I saw an article in our local paper extolling the virtues of private prostate cancer screening: “One simple test can save a dreadful death” and so on. The article was initiated by our local urology consultant. It only really gained my attention, being an NHS rather than a private GP, because just a few weeks beforehand the local hospitals had sent out a circular telling us not to refer patients to the urologists for prostate screening under the NHS because it was of no preventative value. The catch was that the consultant recommending the private service was also an NHS urologist.

So I wrote to him, suggesting that if screening was worthwhile for paying patients, it was for my NHS patients too, but if it wasn’t of value to the latter (as per the guidance we’d been sent) then he ought not to be recommending it to the worried wealthy (a good number of whom were also my NHS registered patients).

Now, to be fair, it became clear that his praxis was intended not so much to make a fast buck as to act on his genuine disagreement with the local policy, and on the kind of grounds you’d expect from a simple surgeon with the venerable professional motto, “In Dubio Exsece” (If in doubt, cut it out). Prostate cancer kills 11,000 UK citizens a year. PSA blood tests are easy, prostate biopsies stock-in-trade, and early radical treatment by surgery or radiotherapy can more or less guarantee eradication of early disease (actually it can’t, but that wasn’t known then).

Meanwhile, though, I’d been looking at the NHS screening authority website, and had fired off some e-mails questioning their far less enthusiastic embracing of screening. It was only after about the third reply to my rather belligerent enquiries that I realised the person replying was Professor Sir Muir Gray, then head of the UK NHS screening service. He taught me a thing or two about the epidemiology of prostate cancer, and of screening in general.

Now unless you’re Sy Garte (whose portfolio includes epidemiology), it’s likely that the situation regarding screening seems as simple to you as it did to my urology colleague. “Seek, and strike!” But it’s actually an extremely complex issue, and there are in reality very few situations in which screening is unequivocally a good thing. You need a disease whose outcomes are predictably bad, whose treatment is safe and effective, and whose early detection is also safe and virtually infallible. Things fall apart very quickly if any one of those falls short.

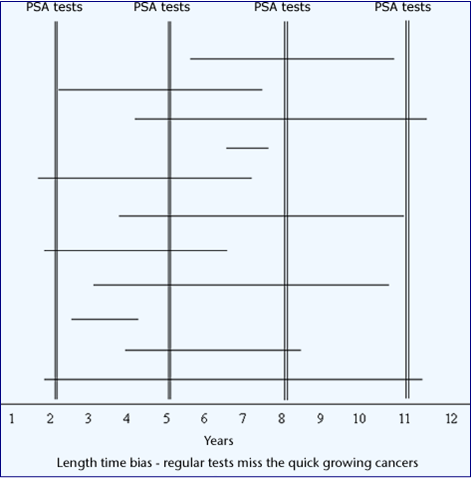

In prostate cancer as it was at the turn of the millennium, none of those were true. PSA blood tests are notoriously guilty of false positives and negatives. Prostate cancer is near-universal in elderly men and compatible with long life, and is only unpredictably aggressive. The radical treatment itself has radical side effects like permanent incontinence and inevitable impotence – and a significant mortality.

The net result is that screening may give false security; or raise awareness and anxiety years early about a disease that would have never caused problems, or which will kill you anyway; or treat what would have been a benign condition with treatment that maims or kills you.
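To put rough numbers on the false-positive problem, here is a minimal Python sketch of the standard Bayes' rule arithmetic for a screening test. The sensitivity, specificity and prevalence figures are invented for illustration only and are not real PSA data; the function names are likewise hypothetical.

```python
def positive_predictive_value(sensitivity, specificity, prevalence):
    """Chance that a person with a positive test really has the disease (Bayes' rule)."""
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    return true_pos / (true_pos + false_pos)


def screening_outcomes(population, sensitivity, specificity, prevalence):
    """Split a screened population into the four possible test outcomes."""
    diseased = population * prevalence
    healthy = population - diseased
    return {
        "true_positives": diseased * sensitivity,
        "false_negatives": diseased * (1.0 - sensitivity),
        "false_positives": healthy * (1.0 - specificity),
        "true_negatives": healthy * specificity,
    }


# ASSUMED, purely illustrative figures (not real PSA performance data):
SENSITIVITY = 0.80   # fraction of true cases the test picks up
SPECIFICITY = 0.90   # fraction of healthy people correctly cleared
PREVALENCE = 0.02    # fraction of the screened group with significant disease

ppv = positive_predictive_value(SENSITIVITY, SPECIFICITY, PREVALENCE)
outcomes = screening_outcomes(10_000, SENSITIVITY, SPECIFICITY, PREVALENCE)

if __name__ == "__main__":
    print(f"Positive predictive value: {ppv:.1%}")
    for name, count in outcomes.items():
        print(f"{name}: {count:.0f}")
```

With these made-up inputs, only about one positive result in seven reflects real disease, and roughly 980 of 10,000 people screened are alarmed needlessly while about 40 are falsely reassured. The sketch says nothing about which cases would ever have caused harm, which is a separate problem again.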

And so it all led to an interesting evening discussion and, given the uncertain state of the science, a change of local policy to “discuss the issues with NHS patients seeking screening and refer them if you and they agree.” That, in fact, was miserable advice as far as I was concerned, as it is not possible to teach epidemiology to lay people, who suspect you’re trying to save money anyway, in a 15-minute NHS consultation. One cancer screening request, and you’re running an hour late (probably with a bad temper because he wouldn’t be educated).

Now, fifteen years later, despite data from some long-expected large trials, prostate screening is still controversial. And last week came this article, which doubts the validity of any existing cancer screening. This suggests that the epidemiological problems are still as intractable as ever, and not only about prostate screening. Mammography screening for breast cancer was finally introduced here in 1988, but I remember observing a conference at Westminster Hospital way back in 1976, in which the greatest experts in breast cancer tossed the pros and cons back and forth and, in general, considered it was not likely to be a great help. Spokespersons in the Indy article say it’s saved many lives; the research article says it’s not that simple.

Now, as the Indy itself indicates, this one paper has many detractors, and may well be wrong in its conclusions about particular, or even all, existing screening programmes. Or it may be right. The point to me is that, many decades after such programmes were instituted across the world – involving probably billions of patients and huge budgets both for operation and research – it’s still possible for leading scientists to argue from the data that it’s been a waste of resources and may even have caused harm overall. And for others, equally eminent, to argue the opposite.

That, I found over a career in medicine, is the general truth about medical research: human beings and their ailments, in their natural setting – which is where we have to do medicine – are just so complicated that the scientific goal of reducing the issues to simple, “evidence-based” outcomes is doomed to result in diametric reversal of “best practice” every few years. Witness other big news stories like the dietary causes of cardiovascular disease, the safe limits for this and that social drug, and so on.

This is the case even in statistical fields like epidemiology (where it’s legitimate to institute policies that benefit the greatest number despite widespread exceptions). It’s much more so when one is dealing with individual human beings, every one of whom steadfastly refuses to behave as an average particle in some medical Newtonian Universe.

Now I’d be the first to say that evidence-based management is far better than voodoo-based management or guesswork. My point is rather one I’ve made a few times recently, which is that the kind of science done in medical trials is just one way, amongst others including the nous of long professional experience, of approaching useful truths. It is not the universal panacea for ignorance that too many influential people in my profession, and in government, believe for ideological reasons (scientism being, ultimately, the ideology).

Thomas Kuhn said more or less the same thing of science in general, and I guess he mostly had in mind fundamental sciences like physics and chemistry. How much more true it is for situations outside the tightly controlled simplicity of a laboratory.

So should you middle-aged men go for your prostate screening? Sure thing – once you’ve signed up for the course on epidemiology.

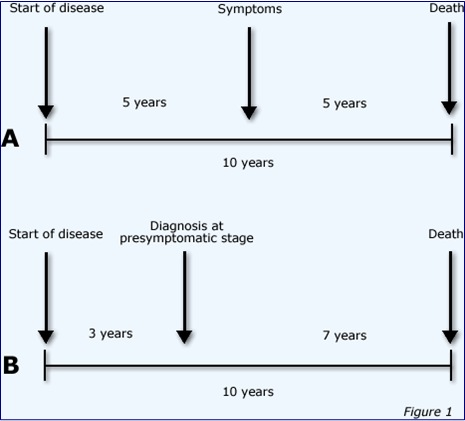

Hazards of screening #1: Lead time bias. You discover something earlier, but make no difference (except in raising anxiety and giving more treatment).
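Lead time bias is easy to show with a toy calculation. The Python sketch below uses three invented patients (all ages are hypothetical, as are the class and function names) and assumes that treatment changes nothing, so each dies at the same age whether or not he was screened.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    age_screen_detectable: float  # age at which a screening test would find the disease
    age_symptomatic: float        # age at which it would be diagnosed from symptoms
    age_at_death: float           # ASSUMED identical with or without screening


def survival_after_diagnosis(patient, screened):
    """Years lived after diagnosis; only the diagnosis date moves, not the death date."""
    if screened:
        age_at_diagnosis = patient.age_screen_detectable
    else:
        age_at_diagnosis = patient.age_symptomatic
    return patient.age_at_death - age_at_diagnosis


def five_year_survival(cohort, screened):
    """Fraction of the cohort still alive five years after diagnosis."""
    survivors = sum(1 for p in cohort if survival_after_diagnosis(p, screened) >= 5)
    return survivors / len(cohort)


# Hypothetical cohort, invented for illustration only.
cohort = [
    Patient(age_screen_detectable=60, age_symptomatic=65, age_at_death=68),
    Patient(age_screen_detectable=62, age_symptomatic=66, age_at_death=72),
    Patient(age_screen_detectable=58, age_symptomatic=64, age_at_death=66),
]

if __name__ == "__main__":
    print("Five-year survival, screened:  ", five_year_survival(cohort, screened=True))
    print("Five-year survival, unscreened:", five_year_survival(cohort, screened=False))
```

In this toy cohort the screened group shows everyone alive five years after diagnosis, against one in three of the unscreened group, yet nobody lives a day longer. That is why "survival from diagnosis" figures alone cannot show that a screening programme works.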

Well done.

The message may be getting through.

Patients sometimes finish my sentence for me when I start to say, “Most men die with prostate cancer but not of prostate cancer”.

As to the article ‘Cancer screening has never been shown to save lives’, surely that depends on which cancer and which screening tool.

For example, I understand that screening for the CDH1 gene in relatives of people with gastric cancer may save the lives of those who are ‘gene-positive’ and elect for prophylactic gastrectomy.

Also, although I haven’t seen recent outcomes for FOB screening for bowel cancer in 60-74 year olds (the pilots looked promising), I’d be surprised if life expectancy wasn’t improved.

Whatever happened to Wilson’s criteria, which include the criterion ‘treatment should be more effective if started early’?

Peter

You’re still practising, and I’m not, so I’m out of touch! Nice to know that public knowledge has begun to move on.

I think, though, the original article was referring to public screening programmes, rather than targeted at-risk groups, which it doesn’t mention. If you can find the link to the original article (free!), it adds some useful detail, including something on FOB screening.

Certainly, however it came across in the news item, the main thrust was not that screening never prevents the disease screened, but that when studies include mortality from other causes, things look less good, and in some studies worse. One point made was that to do adequate work on this would cost billions, and so it’s not often done, especially once screening is up and running routinely.

Such arguments might, in theory, even apply to targeted screening such as CDH1, if one takes into account what proportion of gene-positive people are destined to get gastric cancer, and the undoubted morbidity and mortality of gastrectomy, and associated stress. But I know nothing of the figures for that.

A couple of current news items with some relevance:

Genetic cancer screening would detect illness with a blood test

http://www.nature.com/news/satirical-paper-puts-evidence-based-medicine-in-the-spotlight-1.19133

Satirical paper puts evidence-based medicine in the spotlight

http://www.nature.com/news/satirical-paper-puts-evidence-based-medicine-in-the-spotlight-1.19133

Preston – looks like one of your links got duplicated. Post a correction and I’ll edit it and tidy up.

The spoof one jogs my fading memory into recalling that the first article I had published in the then-respected journal World Medicine, back in 1981, was a comparable spoof, only drawing on real sources. For the inquisitive, here.

This article has some good explanation of the spoof by the author, Dr. Mark Tonelli:

In a different context to mine he makes the same kind of “limitations of science” points that I do. It’s actually quite an ideological war – there was enormous professional and financial pressure in my day to conform to “evidence-based” guidelines, even though in many cases one could see the kinds of bias and inappropriate quantification going on which he highlights.

I noted that the first comment after Preston’s link echoes the “data is all” philosophy one sees in some TEs:

It sounds rigorous and obvious, until you realise it’s just saying “even the unquantifiable must be quantified”.

Sorry for the inability to copy and paste. Here’s the link that was actually more relevant:

Cancer blood test venture faces technical hurdles

http://www.nature.com/news/cancer-blood-test-venture-faces-technical-hurdles-1.19152

Ah, that’s better – I’ll leave the thread as it is.

I’m not quite clear from that latter article whether “tumour specific DNA” indicates “a marker from a pathology yet to become clinically manifest” or “a sure-fire predictor that one of these days a particular cancer will arise.”

The latter sounds particularly problematic, in terms of validation, for mass screening. But even the former carries the same problems as existing screening: remove a bowel, or a breast, or whatever, on the basis of a proxy for disease, even if every kind of test on the removed organ proves negative.

Is it not a perpetuation of the myth of “the gene for X” that has plagued us for a couple of decades or more? (Where “X” = homosexuality, religion, intelligence etc.) The problem, then, might be less technical and more conceptual.

I used to have a photocopy of a spoof article that was pointed out to us when I was in med school. It was intended as an example of how not to write a case study. The title was something like “The benefits of chicken soup therapy in management of the common cold.”

But doesn’t everybody know that chicken soup is superior to placebo in the common cold???