In the batch of articles I’ve done on “chance” over the last month or so, my main target has been the only kind of “chance” that makes much sense in an atheistic framework, and that is what I have called “Epicurean chance”. The basic concept of this is that totally undirected events can lead to order that, otherwise, would demand the designing intention of a purposeful being. Epicureanism has been a philosophically dubious claim ever since Democritus suggested it four centuries before Christ.

Perhaps I should now deal with another “flavour” of chance that appears to underlie many Evolutionary Creationists’ idea of the randomness that “God uses”, and which is probably also assumed by most materialist scientists with their habitual lack of metaphysical reflection on their working principles.

This is a kind of “ordered chance” whose outcomes are not entirely chaotic as in the “Epicurean” version (delivering Marble Arch or Christmas unpredictably) but, as one poster on BioLogos put it, “governed by a probability distribution”. The probability distribution then becomes a natural quasi-law which, I suppose, enables one to say that God creates admirable order in the universe, but with the advantage that he leaves it some “spontaneity” or “freedom”, thus avoiding strict determinism.

Leaving aside the open question of why such a “fuzzy determinist” universe should be advantageous over one that God simply makes (to speak, of course, analogously), it is hampered by the fact that, as I shall endeavour to show, even this kind of chance is not, actually, a true cause at all, any more than is Epicurean chance. Chance still only means “epistemological randomness” – ie our ignorance of actual causes. Chance causes nothing, and probability causes nothing. Ever.

But before attempting to demonstrate this, let me point out how it has slipped in to dominate even the secular science discussion, by a Monod-influenced quote which I also used in another recent post:

The Way of the Cell, published last year by Oxford University Press, and authored by Colorado State University biochemist Franklin Harold, who writes, “We should reject, as a matter of principle, the substitution of intelligent design for the dialogue of chance and necessity (Behe 1996); but we must concede that there are presently no detailed Darwinian accounts of the evolution of any biochemical system, only a variety of wishful speculations.” (my emphasis)

Now, if there is an intelligent designer, design is a real cause. If not (and Harold excludes it on some unstated principle), then the substitutes he proposes are chance (which I argue cannot be a real cause) and necessity which, in itself, is not a cause either, until cashed out in specific laws that are themselves not the product of necessity, but either of design (excluded) or of chance (still not a cause). To quote Ed Feser on this:

Chance presupposes a background of causal factors which themselves neither have anything to do with chance nor can plausibly be accounted for without reference to final causality, so that it would be incoherent to suppose that an appeal to chance might somehow eliminate the need to appeal to final causality.

One must add that how any such laws can possibly impose themselves causally on matter is a matter of utter mystery.

All Harold has done, therefore, and presumably Monod before him, is to profess ignorance of the causes of evolution and propose a non-cause as if it were an explanation. He has rejected one cause (God) and replaced it with two non-causes (chance and necessity). This may be enough to satisfy some people’s curiosity about how the world works, but not mine – as the old joke says, you cannot substitute a wit by two halfwits.

If, as I suspect, Harold has the idea that the science of statistics has shown such chance to be orderly in the sense of “predictable probabilistically”, then even if it were a true cause, it would require an explanation for its order, either from Epicurean chance or by the banished designer imposing the order. But in fact, “chance” even of this kind is not a true scientific (ie efficient material) cause. The house is built on sand. And that’s before you try to explain why non-material universal laws of any sort should be binding on matter! Let’s seek to demonstrate.

Let’s start with a simple “random system”, a coin toss. This is easily idealised as having a 50-50 probability distribution (though empirically it hardly ever does, any more than male and female births will be exactly equal simply because there are only two alternatives). If we wish to see how much the probability distribution influences or governs the outcomes, we can experimentally simply control all the other probable causative factors such as initial conditions, magnitude of toss and so on, leaving only the effect of the probability. This has actually been done, as this paper shows, by building a coin-tossing machine. If set up carefully, it is found that such a machine will produce the same result every time.

In other words, when all other known factors are eliminated, the probability distribution (50:50) has absolutely zero effect on the outcomes (100:0). On the contrary, it is the design of the system and the variation in physical causes that dictates the probability function. And when we eliminated those variations, we created an entirely new probability function. Chance was eliminated as a true cause – as if that’s surprising.
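The point can be sketched with a toy simulation. What follows is only a minimal illustration under my own assumptions, not the machine described in the paper: the coin is treated as a rigid disc launched heads-up, its face on landing is fixed entirely by launch speed and spin, and the nominal values and spreads (`NOMINAL_V`, `NOMINAL_OMEGA`, and the arguments to `heads_fraction`) are invented for the example.

```python
import math
import random

G = 9.81               # gravitational acceleration, m/s^2
NOMINAL_V = 2.0        # hypothetical launch speed, m/s
NOMINAL_OMEGA = 200.0  # hypothetical spin rate, rad/s


def toss(v, omega):
    """Fully deterministic outcome for a coin launched heads-up.

    The coin is in the air for 2v/g seconds and turns through
    omega * time radians; it shows heads if it lands face-up.
    """
    flight_time = 2.0 * v / G
    angle = omega * flight_time
    return "H" if math.cos(angle) > 0 else "T"


def heads_fraction(n, v_spread, omega_spread, seed=0):
    """Fraction of heads when launch conditions vary within the given spreads."""
    rng = random.Random(seed)
    heads = 0
    for _ in range(n):
        v = NOMINAL_V + rng.uniform(-v_spread, v_spread)
        omega = NOMINAL_OMEGA + rng.uniform(-omega_spread, omega_spread)
        if toss(v, omega) == "H":
            heads += 1
    return heads / n


# "Machine": no variation at all in the physical causes.
machine = heads_fraction(10_000, v_spread=0.0, omega_spread=0.0)
# "Human thumb": sloppy, varying launch conditions.
human = heads_fraction(10_000, v_spread=0.3, omega_spread=30.0)

print("machine:", machine)
print("human:  ", human)
```

In this sketch nothing called “probability” appears anywhere in `toss`. The near-even split in the second case comes wholly from the spread of launch conditions, and it disappears (the fraction becomes exactly 0 or 1) once that spread is removed.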

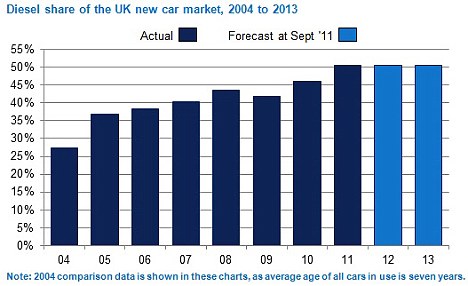

If instead of coin-tosses we take as an example some sociological survey, the impotence of probability as a cause is even clearer. The data in this figure suggests I stood a 25% chance of choosing a diesel if I bought a car in 2004, and a 50% chance if I bought one in 2011. There is a trend over time, and presumably a stage magician would have been able to predict I had a petrol car more reliably at the earlier date. But it’s blatantly obvious that the probability causes nothing whatsoever, but simply reflects individual choices (true causes) based on propaganda about fuel economy and reduced pollution, and cheaper Road Tax. In fact, since then new scares about diesel particulates, high diesel costs and the scandal over car-manufacturers’ economy figures have led to a dip in the last two years’ diesel sales, by influencing those choices: the probability distribution had no such influence – once again, probability is not a cause.

In our first example, then, physical conditions, and not chance, caused the observed distribution. In our second, it was all about intelligent (if sometimes misinformed) human choices, not chance.

At this point, let’s mention the classic example of a “truly random” phenomenon – the quantum event, “governed” statistically by some of the most precise probability distributions in science, yet totally unpredictable individually. The demonstration that “hidden local variables” almost certainly do not exist in this situation has led some to say that quantum events are “uncaused”, which is actually a confusion of “Epicurean randomness” (stuff just happens) with the “soft chance” we are considering here, which is an orderly nature producing a predictable probability curve. Perhaps, though, given the lack of known causes, quantum events are one instance in which we can say that chance is a truly scientific efficient cause in its own right?

Well sadly, we can’t, for the simple reason that whatever leads to individual quantum events, according to Bell’s theorem it is not a material efficient cause – and it is therefore beyond the purview of science altogether. “Causes unknown” is all we can say as scientists. Speaking as philosophers, though, since our other probability distributions have all been the effects of true causes, either physical or intelligent, then it is more plausible that the (unknowable) causes of quantum events lead to quantum statistics, than that the probabilities cause the events by some occult mathematical influence.

Now consider a medical situation, loosely based on the discovery a while back that some classes of first line antihypertensives are far less effective in black patients than in whites. The realisation of this was slow, partly because most drug trials were done on white volunteers in western countries. But imagine that initial trials had been done in black Africa, for cheapness perhaps. Imagine a new antihypertensive drug turning out to be ineffective compared to placebo in such a study: the rare instances of improvement would be put down as anomalies due to chance, and the drug never marketed for blood pressure.

Maybe years later, when the drug has become widespread in some other use (treating migraine, perhaps), someone notices a modest effect on lowering of blood pressure across US patient populations of all races. Once new trials are conducted, deliberately or accidentally, on whites, the gains are suddenly seen to be statistically very significant. Now, this is an example of “sampling error”, in that a very strong causal relationship between the drug and the therapeutic effect was partly masked in a mixed population, and almost entirely obscured in the original black subjects.

Perhaps further research might even show that the rare anomalous improvements in the first studies were because the beneficiaries carried an allele like that of whites, owing to mixed ancestry. Genetic targeting of drugs is now becoming more common, and on far less crude criteria than that of race.

My point is that when one is dealing with real causes one doesn’t understand (in this case, how the drug actually works, and the fact that it has vastly different effects in different subjects), one has no warrant for saying that an effect is due to chance. There is one set of true reasons for failure, and a quite different set for success.
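The imagined trial can be put into numbers with a short sketch. Everything in it is hypothetical: the allele frequencies, the 15 mmHg drop in carriers, the noise level and the names `simulate_trial` and `summarise` are my own assumptions for illustration, not data from any real study.

```python
import random
from statistics import mean

CARRIER_EFFECT = -15.0  # hypothetical blood-pressure change in carriers, mmHg
NOISE_SD = 5.0          # hypothetical measurement scatter, mmHg


def simulate_trial(n, carrier_freq, seed):
    """Return (is_carrier, bp_change) for n patients.

    The drug works only in carriers of the (hypothetical) responsive allele;
    everyone also shows some unrelated scatter in their readings.
    """
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        carrier = rng.random() < carrier_freq
        effect = CARRIER_EFFECT if carrier else 0.0
        rows.append((carrier, effect + rng.gauss(0.0, NOISE_SD)))
    return rows


def summarise(rows):
    """Mean change overall, among carriers, and among non-carriers."""
    overall = mean(change for _, change in rows)
    carriers = [change for is_carrier, change in rows if is_carrier]
    others = [change for is_carrier, change in rows if not is_carrier]
    return (
        overall,
        mean(carriers) if carriers else None,
        mean(others) if others else None,
    )


# First trial: population in which the allele is rare (assumed 3%).
first = summarise(simulate_trial(2000, carrier_freq=0.03, seed=1))
# Later trial: population in which the allele is common (assumed 70%).
later = summarise(simulate_trial(2000, carrier_freq=0.70, seed=2))

print("first trial (overall, carriers, others):", first)
print("later trial (overall, carriers, others):", later)
```

Under these assumptions the overall average in the first trial is close to zero, so the drug looks useless and the few responders look like flukes. Yet the carriers respond just as strongly in both trials: the cause was the same all along, and only the mix of patients differed.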

Now consider this in relation to the standard theory of evolution, which says that mutations (of various sorts) that are random with respect to fitness (and possibly random in themselves, from “chance” copying errors and so on) produce the variation on which natural selection can act. Such random variations also account for the drift of neutral theory.

This notion of the randomness of variations is bolstered by the probability distributions they obey, from which predictions can be made, about molecular clocks and so on. But we have seen in several cases now that the idea of “obeying” probabilities is putting the cart before the horse: the probability functions are actually the effect of real, but unknown and probably multifarious, causes, which our ignorance forces us to dump in a single black box called “chance”.

The only way we can conclude that variations are random with respect to fitness is by treating all mutations as one single type of cause, and making the tacit assumption that if evolutionary processes, or God, were aiming at “fitness”, all or most mutations would produce innovations, better survival and so on. As it is, most change is near-neutral, much deleterious, and the rare highly beneficial mutations, from which we must assume come the big phenotypic changes seen in macroevolution, must be entirely fortuitous. After all, why would God (or a natural but teleological evolutionary process) produce all those errors and disasters and so few creative solutions?

Truly beneficial mutations (as opposed to the mildly beneficial near-neutral sort) are indeed rare – most sources I’ve read suggest the percentage amongst all mutations is too low to measure. But then in our medical example the right allele was so rare in black people that benefit was wrongly attributed to “chance”, rather than the correct specific cause of “a suitable drug receptor”.

I’ve already shown that “chance” is always a non-explanation, and that mutations may have all kinds of true causes. Just suppose that the vast majority of them occur for quite mundane physical reasons, such as failure of error-correction, ionising radiation, and similar causes. Purifying selection weeds out the seriously deleterious, genetic drift is sufficiently indifferent to survival to be tolerated, and microevolution creeps slowly in one direction or oscillates around a mean, according to the particular theory you hold. Life carries on routinely on this basis, just as it does amidst parasitism, predation and inclement weather. Theologically, such things are not divorced from providence, but can be seen as part of general providence rather than creative special providence.

But suppose also that a rare, and entirely separate, class of change (too rare to register in the probability distributions) is the direct result of the providential creative activity of God. Scientifically we could call it “beneficial mutation”: theologically we could call it creatio continua. If such were the case, it would be as mistaken to say such mutations were “random with respect to fitness” as it would be to say that the rare benefit of our antihypertensive to certain black patients was “random”. In the latter case, the effect was caused by a particular gene, and in the former the effect of evolutionary innovation was a slam-dunk result of a precisely targeted mutation. In both cases all we have done is sort the signal from the noise of miscellaneous processes wrongly attributed to “randomness”.

Although I’ve put this in a different way from the way he would have put it (because the myth of probability as a scientific cause was much less prevalent in his day), Asa Gray shows well how such divine input can work smoothly against the background of natural processes including adaptive selection:

The origination of the improvements, and the successive adaptations to meet new conditions or subserve other ends, are what answer to the supernatural, and therefore remain inexplicable. As to bringing them into use, though wisdom foresees the result, the circumstances and the natural competition will take care of that, in the long-run. The old ones will go out of use fast enough, except where an old and simple machine remains still best adapted to a particular purpose or condition — as, for instance the old Newcomen engine for pumping out coal-pits. If there’s a Divinity that shapes these ends, the whole is intelligible and reasonable; otherwise, not.

Which is only to say that if God creates something better fitted for its role, it will survive in the natural world because creation is good. It’s not just that such a divine role in evolution is possible – it’s that once one dethrones “chance” as a true cause with almost mythic occult powers, it’s utterly necessary to explain where innovation comes from by some true cause operating in real time.

The coin toss showed that variation came from varying causes – ultimately all due to (inexact) human volition. Factor that out, and the coin toss became predictably unchanging and lawlike. In the diesel survey variation also came from human choice, and never became lawlike. In quantum events, the cause was outside science, and might as well be due to divine choice as any other non-material cause (if, indeed, the role of human mind is not involved in wave-function collapse, as many believe it is). The drug-trial variations were due to genetics – but even that involved a history of human choices about migration and marriage.

So in these examples at least, quite a lot of “chance” is actually “choice”, so that divine creative choice would be a plausible contender for the positive progress of evolution – were it not excluded as a matter of principle.

The alternative, with “chance” exposed as a non-cause, is to show how the simple mathematical abstractions of regularity we call “physical laws” could produce “by necessity” the extraordinarily complex, ordered and beautiful irregularities we call “living things”. As Norman Swartz, in the IEP says:

Twentieth-century Necessitarianism has dropped God from its picture of the world. Physical necessity has assumed God’s role: the universe conforms to (the dictates of? / the secret, hidden, force of? / the inexplicable mystical power of?) physical laws. God does not ‘drive’ the universe; physical laws do.

But how? How could such a thing be possible? The very posit lies beyond (far beyond) the ability of science to uncover. It is the transmuted remnant of a supernatural theory…

It will be worth exploring how philosophically shaky the idea of natural laws as necessitating entities is in another post, because perhaps few of us are aware it is even controversial. But then, few of us are aware of the huge problems in the concept of “chance” as a cause, either.

Your dethroning chance as a cause continues to fascinate me and rings true as well. We usually think of those things we’ve labeled as “laws” as being a sufficient account of some kind of cause even if the presence of the law itself is not an explanation (as the Davies quote at the end points out.) E.g. if something falls off the table, we are generally content to chalk that up to the law of gravity and to be satisfied (at least most of us – if we aren’t busy pressing further into Einsteinian space-times or other super-laws behind ‘gravity’) with the sufficiency of simply knowing that unsupported things fall. (Echoes of Aristotle in our tendencies to harbor simplifying predilections?). But even here I guess, ‘gravity’ (or curved space-time) isn’t so much a cause as just another observation.

The “law” I keep circling back to with your critiques is the 2nd law of thermodynamics. That comes closest, I should think, to promoting chance up onto a causal throne –and yet it can fare no better against your line of reasoning. A few years ago I would have had no problems stating that “bald chance ensures that my next few breaths will have a sufficient number of oxygen molecules mixed in with all the N2 so that I will not be left suffocating.” The 2nd law would, on ordinary language, account for our self-assurance that all the O2 molecules don’t end up randomly congregated on the far half of the room from where I am leaving me in dire straits. But on your telling, the 2nd law causes nothing at all and is merely our observation for how things just ordinarily or providentially work. And I’m fine with that. But it does seem to me that it forces us onto one of two paths: one being that we don’t really know the real cause of anything in the pure kind of sense (the high bar you set for ‘randomness’ that it then fails to clear), or two: that we just devolve to (or remain in) our sullied pools of epistemological semantics where we pragmatically speak of nearly anything as being a cause (from ‘gravity’ to ‘randomness’).

Merry Christmas and Happy new year, by the way! I’m particularly glad for the Hump right now since my other haunt at Biologos is rightfully shut off for the holidays. (But I am always glad for the Hump in any circumstances!)

Happy New Year tomorrow, Merv!

Interesting that you mention the 2nd law of thermodynamics, because looking for graphics for the OP I kept coming across an irrelevant, I thought, quote by Arthur Eddington:

But in The Nature of the Physical World he says a lot about the oddness, and almost mysticism, of the second law, “that incongruous mixture of theology and statistics known as the second law of thermodynamics”, mainly because it depends on a subjective, teleological assessment of what is more ordered and what is less ordered. Mathematically, like the other laws it is completely reversible as to cause and effect, and to the “arrow of time”. Isn’t it odd that the most fundamental law of nature should depend on our entirely subjective experience of time, rather than on the maths?

In effect, the laws are temporally static (as I was hinting obliquely regarding the strangeness of invoking them as the cause of the organised variety in our contingent universe). The article I quote in the OP has shown me the relationship between the acausality of chance and the major philosophical doubt about the causality of the laws – the subject of the next piece, God willing. If chance and necessity are not causes, then what is?

The two are of course closely linked: to quote Eddington again (and maybe to give some further considerations for Richard Wright’s position on a Nature unfolding entirely by God’s initial laws): “It is impossible to trap modern physics into predicting anything with perfect determinism because it deals with probabilities from the outset.”

Eddington refers to quantum indeterminacy, of course, but macro-events are represented by the sum of those statistics – and in most cases, by laws, like Maxwell’s, that are also statistical and so, in Maxwell’s own estimation, mere approximations. We’re often fooled, I suspect, by the mathematical precision used in expressing the laws, when nature itself is less precise (hence the mention, above, that only a Platonic coin toss has a 50% probability).

I don’t think any of this risks debunking science – though it does, I think, debunk some of scientists’ common metaphysical assumptions, which have the effect of elevating science above its true scope. So what I’m trying to do is flesh out Joshua Swamidass’s thoughts about science as a defined sphere of knowledge, and point out the limitations of science as a source of final truth. That, I think, leads either to a more legitimate agnosticism, or if that is intellectually unsatisfying, to making clear the need for creation beyond the scope of science. As you rightly observe (in line with both Eddington and Swartz):

That’s roughly where I’ll start the next post, hopefully providing both some scientific and theological hope!